Professor Wöhrle's team includes researchers from the Department of Electronic Components and Circuits (EBS) and the Smart Embedded Systems group at the Fraunhofer Institute for Microelectronic Circuits and Systems (IMS). Together, they develop hardware and software architectures for future embedded systems based on artificial intelligence methods, with an emphasis on medical systems. The collaboration combines the expertise of both institutions in domain-specific computer and accelerator architectures and in the corresponding algorithms and their implementation in software and hardware. Target applications include neural implants, biosignal processing, sensor data processing and fusion, artificial intelligence and robotics. The underlying technologies are developed, simulated and characterized in the EBS department; this work forms the basis for their transfer into applications and their further optimization at Fraunhofer IMS.

Prof. Hendrik Wöhrle

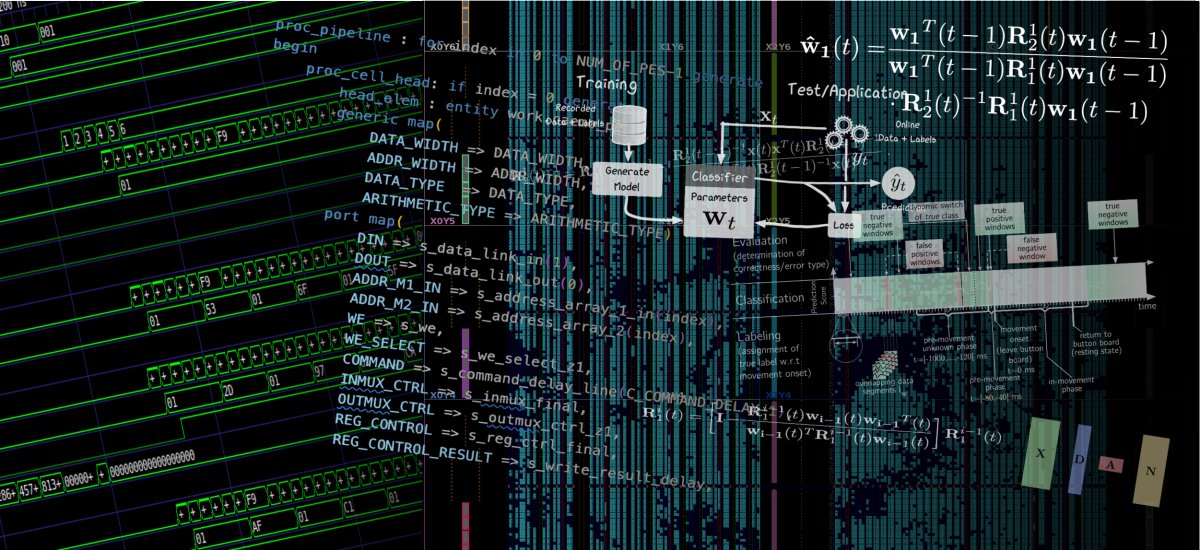

Research focus: AI hardware and domain-specific accelerator architectures

Research on AI hardware and domain-specific accelerator architectures aims to develop new computer architectures that execute application-specific computations as efficiently as possible in embedded systems. Application areas that require this approach include artificial intelligence, machine learning (see the keywords “Edge ML” and “TinyML”) and robotics. The work centers on new application- and domain-specific computer architectures, which are primarily implemented in digital logic. A further focus is on dataflow-inspired models, which represent an application as a set of discrete actors that communicate through channels. The goal is to maximize computing performance for a given application while minimizing power consumption and area.
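To make the actor/channel idea concrete, here is a minimal Python sketch of a dataflow graph. The classes `Channel` and `Actor`, the function `run` and the example graph are hypothetical illustrations, not the group's tools. The firing rule used here (an actor fires once every input channel holds a token, consuming one token per input and producing one per output) is a simplified, single-rate form of dataflow semantics.

```python
from collections import deque


class Channel:
    """FIFO channel carrying tokens from one actor to the next."""

    def __init__(self, tokens=()):
        self.fifo = deque(tokens)


class Actor:
    """Actor that consumes one token per input channel and emits one token per output channel."""

    def __init__(self, fn, inputs, outputs):
        self.fn = fn
        self.inputs = inputs
        self.outputs = outputs

    def can_fire(self):
        # Simplified firing rule: at least one input, and every input holds a token.
        return bool(self.inputs) and all(ch.fifo for ch in self.inputs)

    def fire(self):
        args = [ch.fifo.popleft() for ch in self.inputs]
        result = self.fn(*args)
        for ch in self.outputs:
            ch.fifo.append(result)


def run(actors):
    """Fire enabled actors until no actor can fire any more."""
    progress = True
    while progress:
        progress = False
        for actor in actors:
            if actor.can_fire():
                actor.fire()
                progress = True


# Hypothetical example graph: y = x * w, then z = ReLU(y).
x = Channel([1, -2, 3])
w = Channel([2, 2, 2])
y, z = Channel(), Channel()
graph = [
    Actor(lambda a, b: a * b, [x, w], [y]),
    Actor(lambda v: max(v, 0), [y], [z]),
]
run(graph)
print(list(z.fifo))  # [2, 0, 6]
```

Because each actor only reads from and writes to its own channels, such a graph can, in principle, be mapped onto separate hardware stages connected by FIFOs, which is one reason dataflow models suit accelerator design.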

Research focus: Efficient AI models and architectures

The overarching goal of this area is to optimize deep neural networks for use in embedded systems. To this end, we investigate how to reduce network size through quantization, mixed-precision computing, pruning, knowledge distillation, or by inducing specific properties such as structured sparsity. Furthermore, we use methods from areas such as neural architecture search for hardware-software-algorithm co-design and design space exploration, jointly optimizing the model, its deployment and the accelerator.
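As an illustration of two of the techniques named above, the following Python sketch shows generic symmetric per-tensor int8 quantization and unstructured magnitude pruning. It is a textbook-style sketch, not the group's method: the function names and example weights are hypothetical, and practical toolchains typically add per-channel scales, calibration data and fine-tuning.

```python
def quantize_symmetric(weights, num_bits=8):
    """Symmetric per-tensor quantization: w ≈ scale * q with q a signed integer."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8-bit
    max_abs = max(abs(v) for v in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer and clip to the representable range.
    q = [max(-qmax, min(qmax, round(v / scale))) for v in weights]
    return q, scale


def dequantize(q, scale):
    """Map integers back to approximate float values."""
    return [v * scale for v in q]


def magnitude_prune(weights, sparsity):
    """Unstructured magnitude pruning: zero the `sparsity` fraction of smallest-magnitude weights."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


weights = [0.5, -1.27, 0.01, 0.9]  # made-up example values
q, scale = quantize_symmetric(weights)
print(q)                              # [50, -127, 1, 90]
print(magnitude_prune(weights, 0.5))  # [0.0, -1.27, 0.0, 0.9]
```

Rounding bounds the reconstruction error of each weight by half a quantization step (`scale / 2`). Structured sparsity would instead zero out whole rows, channels or blocks, which is easier for hardware to exploit than the scattered zeros produced here.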

Research focus: Adaptive and Decentralized AI for Robust Learning under Domain Shifts

This research develops adaptive and decentralized AI frameworks to address the challenges of dynamic and distributed learning. It focuses on enabling models to handle domain shifts by continuously adapting to changing data distributions with minimal supervision. The work emphasizes federated and decentralized learning to enable scalable, privacy-preserving collaboration across heterogeneous, edge-based systems. It also explores continual and online learning methods that allow AI models to learn incrementally from streaming data without forgetting previously acquired knowledge. By ensuring robustness and efficiency under resource constraints, the research enables AI systems to operate effectively in non-stationary, distributed, real-world environments such as IoT, autonomous systems and edge AI deployments.
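The core idea of federated learning can be sketched with the widely used federated averaging (FedAvg) scheme: clients train on their own data, and a server only averages the resulting model parameters, weighted by each client's sample count. The Python sketch below uses a one-parameter linear model and two hypothetical clients; the functions `local_update` and `fed_avg` and all data are illustrative assumptions, not the group's framework.

```python
def local_update(w, data, lr=0.1, epochs=5):
    """Client-side gradient descent on the 1-D least-squares model y ≈ w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def fed_avg(w, client_datasets, rounds):
    """FedAvg-style loop: clients train locally, the server averages weights by sample count.

    Only model parameters leave a client; its raw data never does.
    """
    total = sum(len(d) for d in client_datasets)
    for _ in range(rounds):
        local_ws = [local_update(w, d) for d in client_datasets]
        w = sum(lw * len(d) for lw, d in zip(local_ws, client_datasets)) / total
    return w


# Two hypothetical clients whose inputs come from different ranges
# (a simple form of heterogeneity); both follow y = 3x.
client_a = [(0.1 * i, 0.3 * i) for i in range(1, 6)]
client_b = [(1.0, 3.0), (0.5, 1.5)]
w_global = fed_avg(0.0, [client_a, client_b], rounds=50)
print(round(w_global, 3))  # 3.0
```

For simplicity every client participates in every round; practical deployments usually sample a subset of clients per round and must cope with clients whose data follow genuinely different distributions.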

Research focus: Transformers and Post-Transformer models for Edge AI

In this area, we optimize state-of-the-art AI models for use in resource-constrained systems. This includes architectures such as the Transformer, which is currently widely used for large language models (LLMs), as well as newer approaches such as state-space models (e.g., Mamba). Target applications include computer vision as well as time series analysis (e.g., EEG, EMG) and prediction.
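To show why state-space models are attractive for embedded inference, the Python sketch below runs a diagonal linear state-space model in its recurrent form, using zero-order-hold discretization. It is a simplified, linear time-invariant variant: Mamba additionally makes the step size Δ and the matrices B and C depend on the input (a "selective" scan), which is omitted here. Function names and parameters are hypothetical.

```python
import math


def discretize(a, b, delta):
    """Zero-order-hold discretization of a diagonal continuous-time SSM (all a_i < 0)."""
    a_bar = [math.exp(delta * ai) for ai in a]
    b_bar = [(abar - 1.0) / ai * bi for abar, ai, bi in zip(a_bar, a, b)]
    return a_bar, b_bar


def ssm_scan(x, a, b, c, delta):
    """Recurrent form: h_t = A_bar * h_{t-1} + B_bar * x_t,  y_t = C . h_t."""
    a_bar, b_bar = discretize(a, b, delta)
    h = [0.0] * len(a)  # the state has a fixed size, independent of sequence length
    ys = []
    for xt in x:
        h = [abar * hi + bbar * xt for abar, hi, bbar in zip(a_bar, h, b_bar)]
        ys.append(sum(ci * hi for ci, hi in zip(c, h)))
    return ys


# Hypothetical single-state example driven by a step input.
y = ssm_scan([1.0] * 200, a=[-1.0], b=[1.0], c=[1.0], delta=0.1)
print(round(y[0], 4), round(y[-1], 4))  # 0.0952 1.0
```

In this recurrent form, each new sample of a time series (for example, an EEG channel) costs a constant amount of compute and memory, whereas a Transformer's key/value cache grows with the context length.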

Research focus: Trustworthy AI for embedded systems

Using AI models in embedded systems often requires reliable outputs or, at a minimum, precise information about the uncertainty of those outputs. To this end, we use methods from explainable AI and uncertainty quantification so that AI models can also be deployed in safety-relevant application areas such as medical devices.
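One common uncertainty quantification technique, shown here only as an example and not necessarily the one used by the group, is to average the softmax outputs of several ensemble members (or several Monte Carlo dropout passes) and report the entropy of the averaged distribution. The Python sketch below assumes hypothetical logits for a three-class problem.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def predictive_uncertainty(member_logits):
    """Average the softmax outputs of several ensemble members (or MC-dropout passes)
    and return the mean class probabilities and their entropy in nats."""
    probs = [softmax(logits) for logits in member_logits]
    n = len(probs)
    mean = [sum(p[k] for p in probs) / n for k in range(len(probs[0]))]
    entropy = -sum(p * math.log(p) for p in mean if p > 0)
    return mean, entropy


# Hypothetical logits from three members for a 3-class problem.
agree = [[4.0, 0.0, 0.0]] * 3
disagree = [[4.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 4.0]]
_, h_agree = predictive_uncertainty(agree)
_, h_disagree = predictive_uncertainty(disagree)
print(h_agree < h_disagree)  # True
```

When the members disagree completely, the averaged distribution is uniform and the entropy reaches its maximum of ln 3. A device could, for example, defer a decision to a human when the entropy exceeds a threshold; that threshold would be application-specific and calibrated on validation data.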

Projects

BMBF project DI:FEntwumS

The BMBF-funded project DI:FEntwumS aims to develop an open-source toolchain for field-programmable gate arrays (FPGAs) that facilitates the creation of complex system-on-chip designs. To this end, existing open-source tools such as Yosys are being extended and supplemented with new tools for visualization, analysis and machine learning integration. The goal is a coherent, user-friendly development environment that offers transparent and efficient hardware and software solutions. The focus is on optimizing synthesis through machine-learning-supported design space exploration, on developing SoPC (system-on-programmable-chip) tools for hardware-software coupling, and on supporting neural networks through the automated provision of FPGA solutions.
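Machine-learning-supported design space exploration typically uses a surrogate model to predict quality metrics such as resource usage and latency for candidate configurations, then selects the best trade-offs. The Python sketch below shows only that final selection step: filtering the Pareto-optimal configurations from already-evaluated design points. It is not the project's tooling and does not call Yosys; the surrogate model is omitted, and the configuration names and numbers are made up.

```python
def dominates(a, b):
    """True if design a is no worse than b in every objective and strictly better in at least one.

    All objectives are minimized.
    """
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(designs):
    """Return the designs that no other design dominates."""
    return {
        name: objectives
        for name, objectives in designs.items()
        if not any(dominates(other, objectives) for other in designs.values())
    }


# Made-up design points: (LUTs used, latency in clock cycles) for hypothetical unroll factors.
designs = {
    "unroll_1": (1000, 800),
    "unroll_2": (1800, 420),
    "unroll_4": (3500, 230),
    "unroll_4_alt": (3600, 400),
    "unroll_8": (7000, 240),
}
print(sorted(pareto_front(designs)))  # ['unroll_1', 'unroll_2', 'unroll_4']
```

A design never dominates itself, because domination requires a strict improvement in at least one objective, so no self-exclusion check is needed. In an ML-supported flow, the surrogate's predictions would replace most of the expensive synthesis runs that would otherwise produce these objective values.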